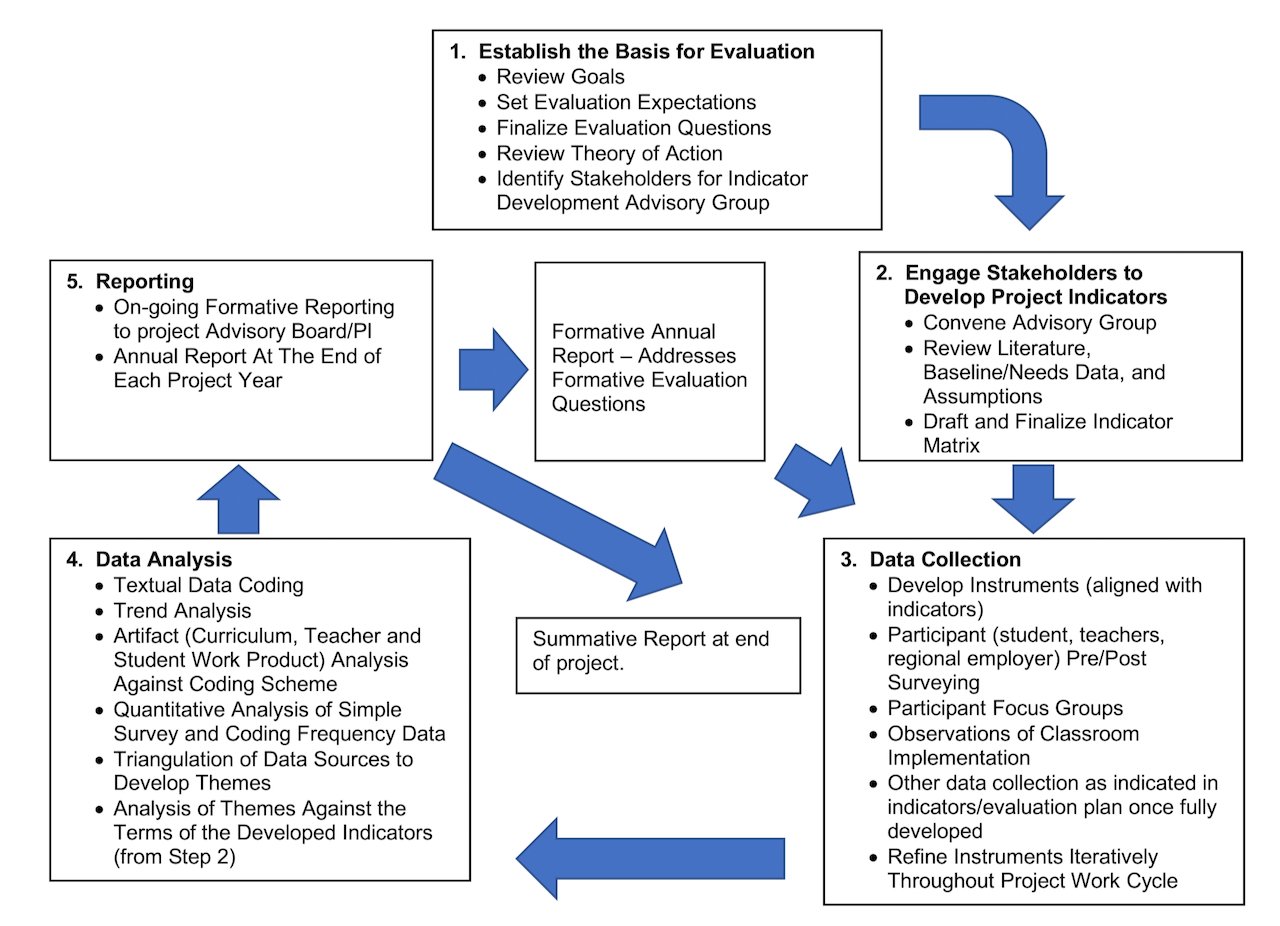

A Process Diagram of Collaborative and Participatory Evaluation

Resources for AEA 2023

Overview

A basic model for collaborative and participatory evaluation is shown above. The collaborative-participatory evaluation process emphasizes the steps numbered 1 and 2 in the diagram. Here, consensus is built around the point of the evaluation, how the evaluation will benefit the project’s community/stakeholders, and expectations for how the evaluators will engage productively with the project being evaluated.

A critical tool in Sun Associates’ approach to collaborative evaluation is the set of evaluation indicators that are developed with project stakeholders in step number 2, above. These indicators are descriptions of project performance as aligned with project goals and framed by the project’s evaluation questions. Indicators are literally descriptions of what stakeholders will see, count, understand, and “do” as the project meets its determined goals. Indicators implicitly include the metrics that a project, through its evaluation, will measure related to project outcomes. Importantly, indicators for all Sun Associates projects (and this should be the case with any truly comprehensive collaborative evaluation) contain a mixture of qualitative and quantitative metrics. An example set of indicators can be viewed here.

In Sun Associates’ approach to collaborative-participatory evaluation, data collection (step 3, above) is always driven by the project’s indicators. Adherence to this principle serves to focus data collection on those activities and project processes that are genuinely meaningful to the evaluation at hand. Focusing data collection is a way of honoring the time and resource limitations present with any project and its participants. At the same time, our strongly qualitative approach to data (focus groups, interviews, observations) helps ensure that participants in the evaluation have a strong, authentic “voice” and that their experiences are not readily or too swiftly reduced to numbers, graphs, and trends.

Data analysis and reporting (steps 4 and 5, above) highlight the unique expertise of the external program evaluator. Here, the collected data is analyzed against the project’s indicators and reports are generated. In a Sun Associates evaluation, reporting is always formative. Central to the usefulness of a collaborative-participatory evaluation is the fact that evaluation findings are continually reported to the project and its stakeholders so that the project may engage productively in a process of continual improvement. Summative reporting is, of course, provided to meet the requirements of project funders and also to empower projects with the information, recommendations, and strategies useful for sustaining their project work after external funding ends.

Citations

There is an extensive literature around collaborative and participatory approaches to program evaluation. The following are a few sources that we have found useful. The NSF documents, while not exactly research articles on methodology, do provide guidelines for approaches to program evaluation that align with Sun Associates’ collaborative-participatory approaches. Feel free to contact us for further sources and information.

Brackett A. & Hurley N. (2004) Collaborative Evaluation Led by Local Educators: A Practical, Print- and Web-Based Guide. WestEd. https://www.wested.org/wp-content/uploads/2016/11/1374814340li0401-3.pdf

Cousins J.B. & Whitmore E. (1998) Framing Participatory Evaluation. New Directions for Evaluation. Vol. 80, 5-22.

National Science Foundation (2010) The 2010 User-Friendly Handbook for Project Evaluation. https://evalu-ate.org/external-resource/doc-2010-nsfhandbook/

National Science Foundation (2013) Common Guidelines for Evaluation Research and Development. https://www.nsf.gov/pubs/2013/nsf13126/nsf13126.pdf

O'Sullivan R. (2012) Collaborative Evaluation within a Framework of Stakeholder-Oriented Evaluation Approaches. Evaluation and Program Planning. Vol. 35, 518-22.